Demo Videos

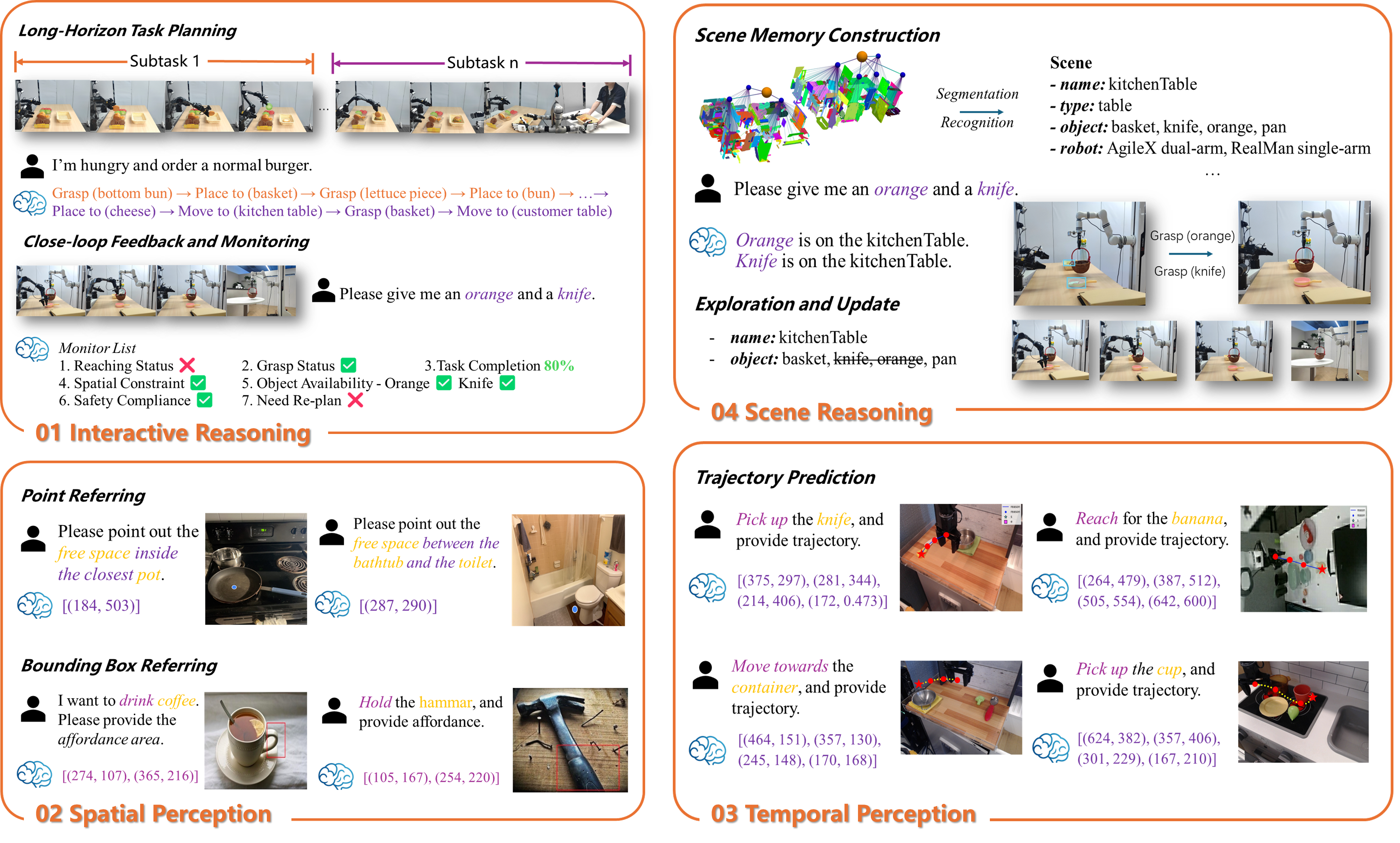

System Stability

This video demonstrates the model's ability to refer to objects by color and its stability in continuous operation.

Real-time Scene Adaptation

This video demonstrates the model's rapid adaptation to new scenes and its ability to judge object proximity, recognize orientation, and estimate distance.

Real-time Voice Interruption Adjustment

This video demonstrates the model's ability to recognize spatial relationships between objects, reason over multiple steps, respond quickly during interaction, and adjust its behavior in real time when interrupted by voice.

Part-level Orientation-related Referring

This video demonstrates the model's ability to recognize the relative heights of objects and to identify object parts referred to by their orientation.

Functionality-oriented Referring

This video demonstrates the model's ability to recognize the relative heights of objects and to identify illuminated areas.

Multi-step Spatial Referring with Reasoning

This video demonstrates the model's ability to recognize spatial relationships between objects and to perform multi-step spatial referring with reasoning.

Structured Arrangement

This video demonstrates the model's ability to understand spatial relationships between objects and to reason about the patterns they form.

Mobile Manipulation

This video demonstrates the model's ability to control a humanoid robot for both tabletop object manipulation and indoor navigation.

Object Attribute Recognition

This video demonstrates the model's ability to accurately recognize and differentiate objects by their sizes and its stability in continuous operation.

Object Affordance Localization

This video demonstrates the model's capability in object affordance prediction (grasping the handle of the mug) as well as locating objects based on their colors and distances.

Spatial Relations Reasoning

This video demonstrates the model's spatial reasoning capabilities, including distance perception (nearest), position awareness (left and front), and free-space localization.

Spatial Referencing and Vacancy Detection

This video demonstrates the model's object referencing capability based on spatial relations and its ability to locate vacant areas in 3D space.